Slow termination of JVM app with very large heap

I spent last evening figuring out why JVM app with more than 1 TBytes of heap is taking almost two minutes to get terminated. Join me on this quest of finding out the root cause of the issue.

Setting the stage

Scala/Java application build for Java 21 that is running in a Docker container. It uses GraalVM CE as JVM (OpenJDK Runtime Environment GraalVM CE 21.0.2+13.1). That app has process id 1887416 and it runs in a container cb6b1f9fdeba. As you can see from the video below, it takes around 1 minute 50 seconds for the process Realtime to exit. Please note that I send SIGKILL (kill -9), so OS terminates the process immediately and does not wait for the JVM to run all registered shutdown hooks. Immediately after receiving SIGKILL that process becomes <defunc>.

Hooking into the Linux Kernel Functions

From the video above you might noticed how memory usage is slowely decreasing (MEM USAGE / LIMIT column of docker stats output). This gave me an idea that Linux Kernel is busy by cleaning up memory resources, mostly using a single core. If we could somehow hook into Linux Kernel functions calls we can try to see what is goinig on. Fortunately, there is a way to do that in Linux, we can use SystemTap. It allows to set probes (hooks) into almost anything in a running Linux system. The set probe can be both when a method is entered and exited, this gives us a way to profile the duration of method call.

First we need to get it installed. Likely you won’t be able to use the one that comes with your package manager, this was the case for me on Ubuntu 22.04.05 LTS with Kernel 5.15.0-130-generic. SystemTap depends heavily on Linux Kernel Interface and we know it isn’t stable. The solution is to get the source code and compile it for your specific kernel. I left this note on how to do that, make sure to install Debug Symbols for the Kernel as well!

WARNING: there is a chance to make the system you’re monitoring with SystemTap unresponsive (like increasing STP_OVERLOAD_THRESHOLD or setting a probe to all kernel functions using *) and requiring a reboot!

The actual investigation was an iterative process where I would add/modify probe(s), run them, check the produces output and decide what to do next. The Kernel Fuctions that I was interested were methods that are reachable from do_exit:

and others, full list of probes can be found here.

You can use stap -L to check whether probe exists, it accepts wildcard as well

I had to increase threshold STP_OVERLOAD_THRESHOLD to 1000000000LL so SystemTap does not stop that script (the monitored JVM app runs on 192 cores host and does a lot of things, traces easily overwhel the system). The command

sudo stap -k -v --disable-cache -D STP_OVERLOAD_THRESHOLD=1000000000LL /opt/systemtap_src/tracing.stp -x #PID# > out.txtThe produces log was more than 85 Mbytes, but the most imporant information was in the end of the file

The duration (the time is Unix Timestamp in milliseconds) between entering and exiting exit_mmap is 1738164271126 - 1738164175728 = 95398 ms. 95 seconds to clean-up memory mappings!

Adding probes for unmap_page_range and zap_pmd_range produces an interesting picture where we can see how Kernel frees pages one by one.

Using perf

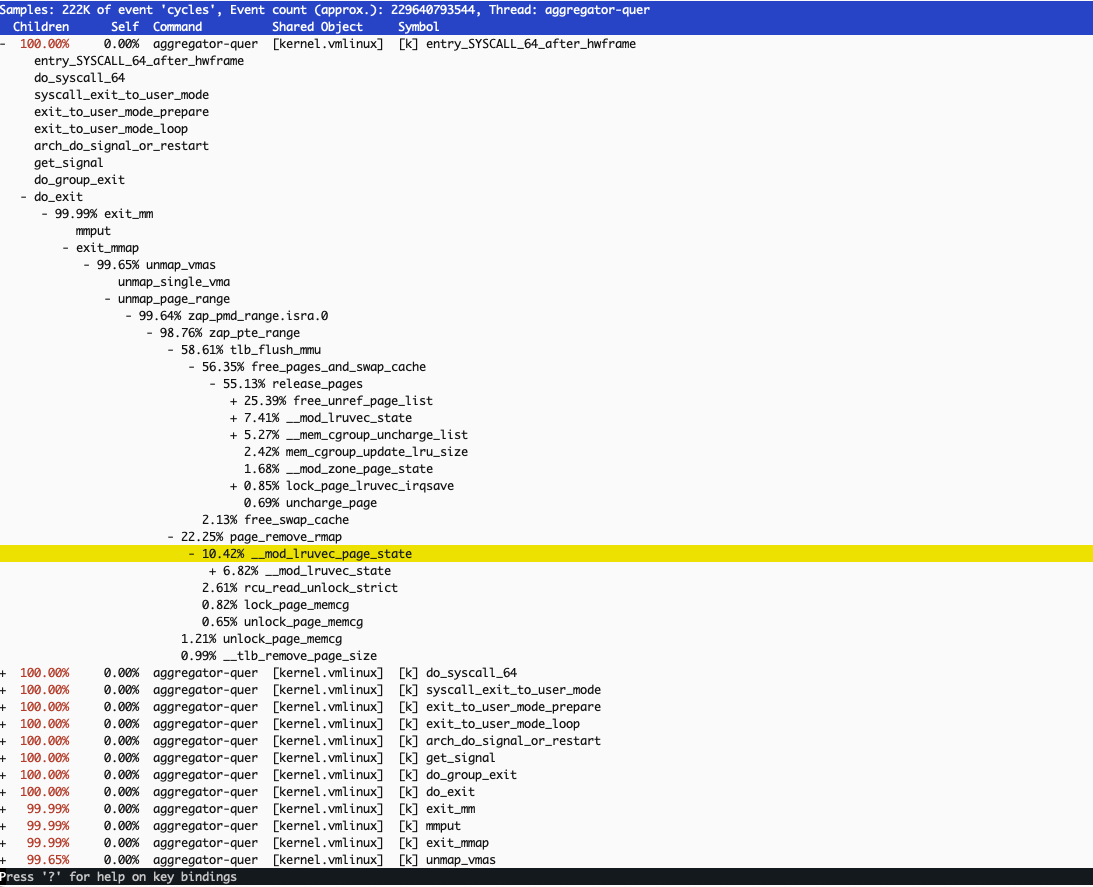

Linux has a great tool perf to analyze performance of application that includes Kernel calls as well. Here is what it shows if I run it after sending SIGTERM to that process (and waiting for a specific message in the log of that JVM app that is logged after shutdown hook is executed)

This graph supports our previous findings and shows new bottlenecks like tlb_flush_mmu and page_remove_rmap.

Possible solution

One of the ways to make exit_mmap faster in Linux for applications that require large amount of memory is to switch to Transparent Hugepages. There should be a change in JVM option as well to enable Large Pages (option -XX:+UseTransparentHugePages).

Enabling -XX:+UseTransparentHugePages made:

Reduced termination time from 1 m 35 seconds to 11 seconds (8.6 times faster)

Reduced the start-up time from 60 seconds to 20 seconds (3 times faster), (note, we use -XX:+AlwaysPreTouch)

Obviously, the termination time isn’t the only metric that one should use to decided whether to switch to Transparent Huge Pages. More thorough testing should be done to understand how it affects the system under production workloads.

it's general recomendation to UseTransparentHugePages on heap starting of few GB :)