Hello, dear reader,

It has been quite sometime since my last post, was busy figuring out how to dad - first baby 😀. Anyways, let start!

Recently I stumbled upon a behavior of Rust app that uses axum web framework that took me some time to understand. Imagine setting up an async handler in Axum, only to find it mysteriously halting mid-execution. Here’s what happened when I encountered this timeout behavior and how to handle it effectively.

Introduction

Imagine simple async handler below that logs, writes something to disk and returns some JSON object:

async fn info_handler() -> errors::Result<Json<Info>> {

info!("Starting executing `info_handler`");

let info = Info {

version: APP_VERSION.to_string(),

current_timestamp: Utc::now(),

};

write_to_disk("data.txt").await?;

info!("Finished executing `info_handler`");

Ok(Json(info))

}Sometime the call to that handler will only log the following

2024-10-26T06:16:54.173790Z INFO tokio-runtime-worker ThreadId(02) axum_timeout_rs: src/main.rs:56: Starting executing `info_handler`and no sign of log Finished executing info_handler at all. The code above is super simplified, actual production code is more complex (for example, it would make calls to S3-like object storage, you see in the logs it started GetObject operation, but never finishes). The answer to this behavior is in the title, timeouts!

Axum provides a flexible way of extending router using middleware well-integrated with tower. ServiceBuilder has a way to configure timeouts, and it fails request if it takes longer than a defined timeout. So far so good, right? (Further reading is going to be a bit technical, get ready!)

Identifying the issue

Failing request sounds too generic. What would happen with those async HTTP calls (S3 calls) or async filesystem operation that are happening? And to understand this better we need to keep in mind that in Rust Future must be polled in order to progress!

Let's model slowness of write to disk operation and show how timeout makes the data written to a file inconsistent (we can overcome this by renaming a file in case if writes succeed, it is atomic and cheap in most filesystems).

The code for Router with timeout , it sets info_handler to process GET requests to /version endpoint, sets handle_timeout_error as error handler and sets timeout for all requests to 1 second:

const TIMEOUT_DURATION: Duration = Duration::from_secs(1);

let route: Router = Router::new().route("/version", get(info_handler))

.layer(ServiceBuilder::new()

.layer(HandleErrorLayer::new(handle_timeout_error))

.timeout(TIMEOUT_DURATION)

.into_inner());And slow method that would be timing out when writing to disk (99 times write to disk current i and sleeps 20 ms after each write)

async fn write_to_disk(path: &str) -> errors::Result<()> {

let mut file = tokio::fs::File::create(path).await?;

for i in 1..100 {

file.write_all(format!("{}\n", i).as_bytes()).await?;

tokio::time::sleep(Duration::from_millis(20)).await;

}

file.flush().await?;

Ok(())

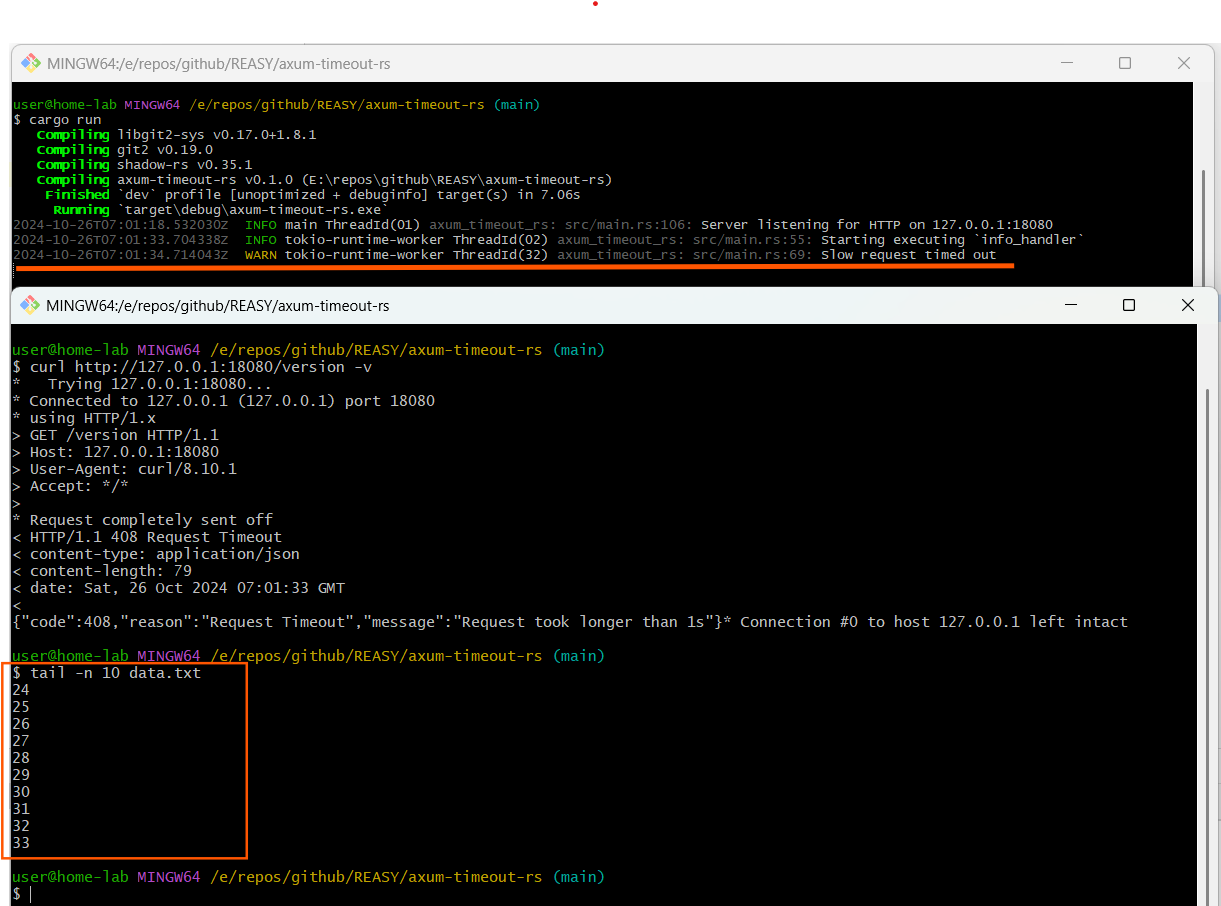

}Let's run it and send a request using curl

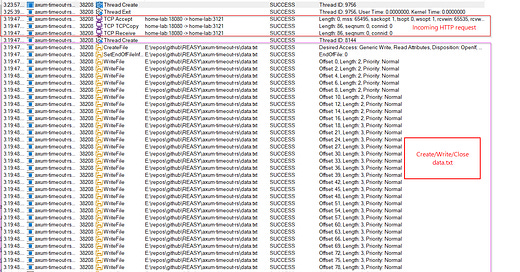

As we can see, it got timed out and the file has only partially written data (the latest number written in data.txt must be 99). Is the file closed? Do we have file handles leaked?

Tracing Timeouts

Process Monitor

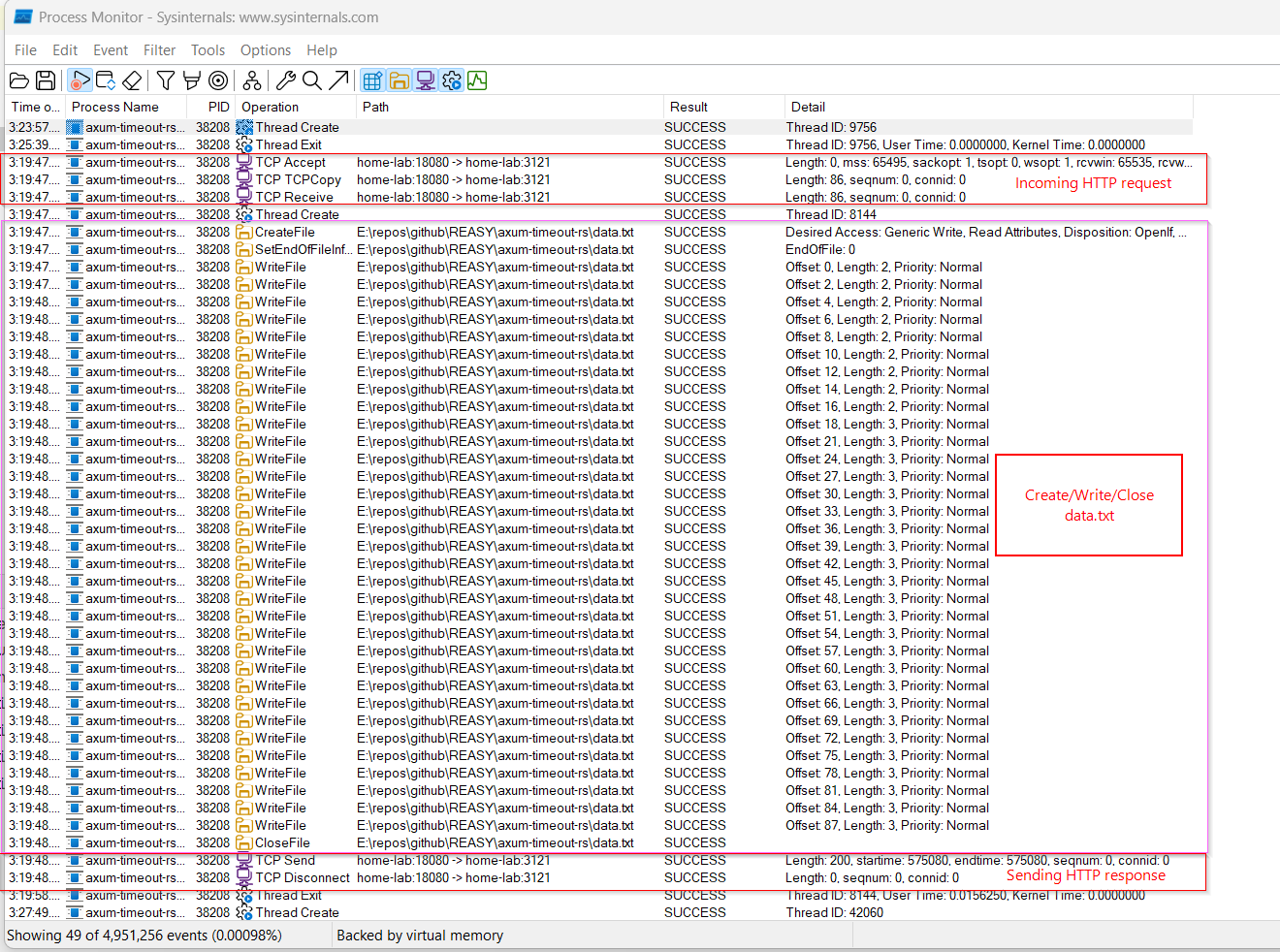

I'm on Windows 11 at the moment, so I can use Process Monitor to see what that process is doing (by filtering by Process Name is axum-timeout-rs.exe ). On Linux-based systems one can use strace.

We can see the incoming TCP connection (HTTP request), the file creation, the write operation, the file closing and the HTTP response being sent to client. OK, at least file was closed. But what closed that file?

Debugger and stack trace

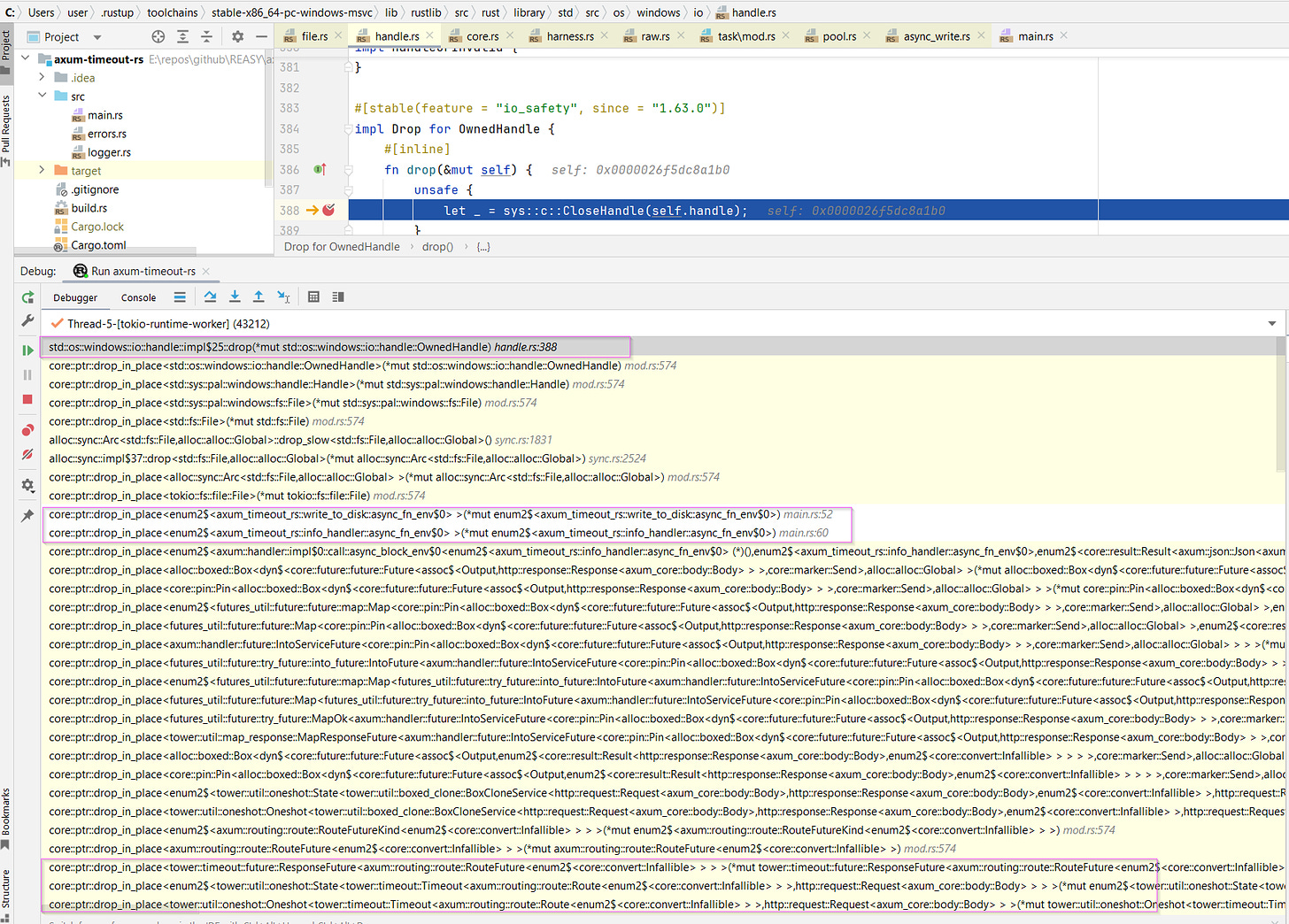

You probably know the answer - it is somehow related to Drop trait. We need to find a type in tokio::fs::File that implements Drop (and with modern IDE like IntelliJ IDEA it is quite easy), here are the types involved on Windows OS to get to it:

tokio::fs::File has

std: Arc<StdFile>field which is synonym forstd::fs::Filetypestd::fs::File has a field

inner: fs_imp::Filewhich on Windows isstd::sys::pal::windows::fs::Filestd::sys::pal::windows::fs::File has a field

handle: std::sys::pal::windows::handle::HandleHandle with a field at 0 with the type

std::os::windows::io::handle::OwnedHandleFinally we reached OwnedHandle that has Drop trait implemented

We can put a breakpoint to see the stack trace:

And below is the explanation what is actually happening:

A timeout is set on router level using ServiceBuilder

That makes Axum to wrap all routes with Timeout that implements Service that must implement poll_ready and call

That on every call creates ResponseFuture (that implements Future!) that creates two futures: the delay (sleep) and actual one

ResponseFuture races those two futures and if delay (sleep) completes first, it returns timeout

Inner future is not polled anymore, it gets out of scope which causes cascade call of drops that we see in the stack trace

Conclusion

Timeouts in Async Handling Can Cause Silent Failures

Unexpected halts in async function execution, especially without obvious error log could be due to timeouts. This behavior can be challenging to debug and may not raise direct errors, as Rust’s async model relies heavily on task completion to drive forward.

Consider Fine-Grained Control Over Timeouts

Abstractions like tokio_util::sync::CancellationToken can help with that control, however it will make code more complex

Axum’s Timeouts Are Powerful but Require Understanding

Atomic Writes Improve Reliability

A good practice for ensuring consistency during file operations is to fully write to a temporary file and then rename it as a final step (assuming this happens on the same disk). This approach provides atomicity, ensuring that if a file operation is interrupted or times out, the main file remains unchanged and consistent, which can prevent data corruption and improve reliability.

Stay curious so that you always keep learning something new

Repository with code: https://github.com/REASY/axum-timeout-rs/

Comments and suggestions are welcome! Thank you for your time.

Thanks for the post!

Why don't blocking operations(writing to a file) help here?